OpenAI acquisition of Neptune aims to boost research tools for frontier models

OpenAI's acquisition of Neptune is a strategic step to deepen its research infrastructure and improve how it monitors and understands frontier AI models.

OpenAI moves to acquire Neptune

OpenAI has entered into a definitive agreement to acquire neptune.ai, a platform focused on experiment tracking and training analytics for advanced models. The deal is designed to strengthen the tools and infrastructure that underpin progress in frontier research. Although financial terms, including the purchase price, have not been disclosed, the strategic intent is clear.

Training state-of-the-art AI systems is a highly creative and exploratory process, but it also depends on observing how a model evolves in real time. Neptune offers researchers a dependable way to track experiments, monitor training runs, and interpret complex model behavior as it unfolds.

How Neptune supports model development

From its inception, the Neptune team has focused on supporting the hands-on, iterative work of model development. The platform is built to help researchers navigate long and complex training workflows without losing visibility into critical metrics.

More recently, Neptune has worked closely with OpenAI to develop tools that allow scientists to compare thousands of runs and analyze metrics across model layers. The collaboration also aims to surface issues earlier in the training pipeline, improving reliability and efficiency for large-scale systems.

Neptune’s depth in experiment tracking and training analytics will help OpenAI move faster, learn more from each experiment, and make better decisions throughout the training process. This directly supports frontier model monitoring and helps optimize how data, architecture choices, and training schedules interact.

Deeper integration into OpenAI’s training stack

OpenAI has indicated that this transaction is about more than a simple acquisition; it is about long-term integration. In one of the first public comments, OpenAI’s Chief Scientist highlighted how Neptune’s system strengthens the company's internal workflows.

“Neptune has built a fast, precise system that allows researchers to analyze complex training workflows,” said OpenAI’s Chief Scientist. “We plan to iterate with them to integrate their tools deep into our training stack to expand our visibility into how models learn.” This vision underscores how research infrastructure tools will sit at the core of OpenAI’s future training pipelines.

The founder and CEO of Neptune echoed this sentiment, framing the deal as a chance to scale the company's impact. “This is an exciting step for us. We have always believed that good tools help researchers do their best work. Joining OpenAI gives us the chance to bring that belief to a new scale.” The companies have not yet provided a specific timeline for closing the transaction.

Implications for frontier research

As frontier AI models grow larger and more complex, model training tools become critical to safety and performance. By bringing Neptune in-house, OpenAI aims to tighten the feedback loop between experimentation, monitoring, and deployment. This could, over time, influence how the broader ecosystem thinks about observability in large-scale AI systems.

The announcement did not mention topics such as any acquisition bid for OpenAI by Elon Musk or other high-profile disputes around earlier investments. Instead, it focused squarely on the technical and research implications of the deal. There was also no reference to other rumored transactions, including any OpenAI acquisition of Windsurf or potential hardware-focused partnerships.

Both companies emphasized that they are looking ahead to the next chapter of training tools. With this deal, OpenAI and Neptune plan to co-develop deeper analytics, richer experiment tracking, and better visibility into how frontier models learn in practice. The first visible outcomes of this collaboration will likely appear in upcoming training runs announced after 2024.

In summary, the agreement between OpenAI and Neptune represents a targeted bet on better infrastructure for frontier AI research. While financial details remain undisclosed, the combination of experiment tracking expertise and large-scale model development could significantly shape the next generation of training workflows.

Ayrıca Şunları da Beğenebilirsiniz

Is Putnam Global Technology A (PGTAX) a strong mutual fund pick right now?

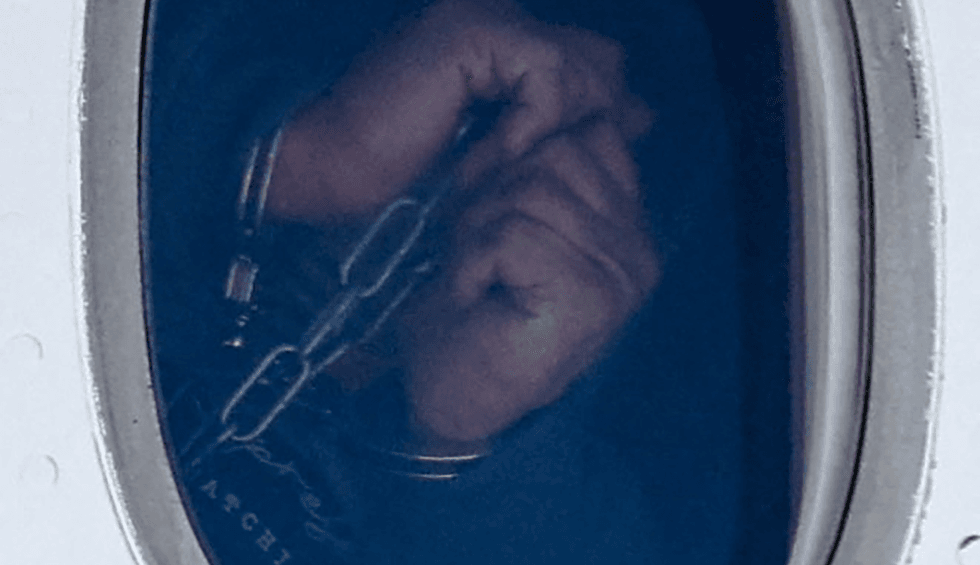

US-wed Irishman with no criminal record detained for months in 'traumatizing' conditions