Why ‘Throwing Away’ Half Your Embeddings Improves Search

Developers often focus on building bigger, more complex models to solve performance challenges. But what if your current embeddings already contain everything you need for dramatically better search results? What if the path to 14% better performance isn’t through GPT-5 or a billion-parameter embedding model, but through intelligently using just 40% of the dimensions you already have?

When we first encountered Dimension Importance Estimation (DIME), a technique recently proposed by researchers at the Universities of Padua and Pisa that improves dense retrieval by simply ignoring large portions of embedding vectors, we were a bit skeptical. No retraining, no new models, just selectively masking dimensions. We had to verify it for ourselves.

Our investigation turned into a comprehensive study that not only reproduced the original results but uncovered why DIME works so effectively. Along the way, we developed improvements that push the technique even further. Here’s what we learned and how you can use it.

Understanding the Original DIME Insight

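The original DIME framework starts from a simple observation: not all dimensions in a dense embedding contribute equally to retrieval quality. For any given query, some dimensions carry strong semantic signals while others mostly add noise. DIME estimates which dimensions matter most for each specific query and temporarily masks the rest when scoring documents. The original paper estimates this importance in two main ways: PRF DIME uses the top-ranked documents from an initial retrieval (pseudo-relevance feedback), while LLM DIME uses the embedding of an answer generated by a large language model.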
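To make the masking step concrete, here is a minimal NumPy sketch. It assumes the importance of dimension i is the product of the query value and an "estimator" value in that dimension, where the estimator is, for example, the centroid of the top-ranked documents (PRF) or an embedded LLM answer. The function name `dime_scores` and the `keep_fraction` parameter are illustrative, not the API of the released implementation.

```python
import numpy as np

def dime_scores(query, doc_matrix, estimator, keep_fraction=0.4):
    """Score documents using only the query dimensions estimated as important.

    query:      (d,) query embedding
    doc_matrix: (n, d) document embeddings
    estimator:  (d,) vector approximating the information need, e.g. the
                centroid of top-ranked documents or an embedded LLM answer
    """
    query = np.asarray(query, dtype=float)
    # Per-dimension importance: how strongly the query agrees with the estimator.
    importance = query * np.asarray(estimator, dtype=float)
    n_keep = max(1, int(round(keep_fraction * query.shape[0])))
    # Indices of the n_keep most important dimensions.
    keep = np.argsort(importance)[::-1][:n_keep]
    mask = np.zeros_like(query)
    mask[keep] = 1.0
    # Zeroing query dimensions is enough: masked dimensions then contribute
    # nothing to the dot product with any document.
    return np.asarray(doc_matrix, dtype=float) @ (query * mask)

# Usage with random data standing in for real embeddings (PRF-style estimator).
rng = np.random.default_rng(0)
docs = rng.normal(size=(1000, 768))
q = rng.normal(size=768)
top5 = np.argsort(docs @ q)[::-1][:5]
scores = dime_scores(q, docs, docs[top5].mean(axis=0), keep_fraction=0.4)
```

With `keep_fraction=1.0` the function reduces to plain dot-product retrieval, which is a handy sanity check when wiring it into an existing pipeline.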

Our Reproducibility Journey

We began by meticulously reproducing the original experiments with the ANCE, Contriever, and TAS-B models on MS MARCO and TREC collections. Our reproduction confirmed the reported improvements, with minor variations. On MS MARCO Deep Learning 2019, we observed up to 11% improvement in nDCG@10 when using Contriever with LLM DIME, closely matching the original findings. On TREC Robust 2004, improvements were 7-8% across models.

The reproduction process revealed interesting nuances. While most results aligned closely with the original paper, we noticed that the exact improvement percentages could vary slightly based on implementation details. This reinforced the importance of careful reproduction studies in validating novel techniques.

But Why Does DIME Work?

The original DIME paper demonstrated strong empirical results but left theoretical questions unanswered. Why does masking dimensions improve retrieval? We developed a mathematical framework to explain this phenomenon.

We modelled each document embedding as the sum of a signal component (representing the true information need) and noise. When aggregating top-k documents through pseudo-relevance feedback, the signal concentrates while noise averages out according to the law of large numbers. By selecting dimensions where the query strongly aligns with this aggregated signal, DIME essentially performs denoising.
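A small simulation can illustrate this signal-plus-noise picture. The setup below is a toy assumption for intuition only (768 dimensions, 100 of which carry a ±1 signal, Gaussian noise); it is not the configuration used in our experiments. It measures how many true signal dimensions are recovered when dimensions are ranked by query times centroid of k noisy feedback documents.

```python
import numpy as np

def dimension_recall(k, d=768, n_signal=100, sigma=2.0, seed=0):
    """Fraction of true signal dimensions recovered by centroid-based selection.

    Toy model (an assumption): each feedback document is signal + Gaussian
    noise, and the query is a noisy copy of the same signal.
    """
    rng = np.random.default_rng(seed)
    signal = np.zeros(d)
    signal_dims = rng.choice(d, size=n_signal, replace=False)
    signal[signal_dims] = rng.choice([-1.0, 1.0], size=n_signal)
    query = signal + rng.normal(scale=0.5, size=d)
    docs = signal + rng.normal(scale=sigma, size=(k, d))
    # Averaging k documents shrinks the noise standard deviation to sigma / sqrt(k).
    centroid = docs.mean(axis=0)
    importance = query * centroid
    selected = np.argsort(importance)[::-1][:n_signal]
    return len(set(selected) & set(signal_dims)) / n_signal

for k in (1, 5, 20, 100):
    print(k, dimension_recall(k))
```

As k grows, the centroid's noise shrinks and the selected dimensions line up more closely with the true signal dimensions, which is the denoising effect described above.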

Using Chebyshev’s inequality, we proved that the error bound for dimension selection tightens as more feedback documents are used. When the signal in the relevant dimensions is strong relative to the noise, the bound is already useful with very few documents, which helps explain why even simple PRF with just one or two documents can provide substantial improvements. In short, DIME works by improving the signal-to-noise ratio in the embedding space.
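One way to write this argument down, assuming the noise vectors are independent across documents with zero mean and per-dimension variance σ², is sketched below. The notation (k feedback documents d_i, signal s, embedding dimensionality D) is ours for this illustration, and the full derivation may differ in its exact assumptions and constants. The last line is a union bound over all D dimensions.

```latex
\begin{aligned}
\mathbf{d}_i &= \mathbf{s} + \boldsymbol{\varepsilon}_i, \quad i = 1, \dots, k,
  \qquad \mathbb{E}[\varepsilon_{i,j}] = 0, \quad \mathrm{Var}(\varepsilon_{i,j}) = \sigma^2 \\
\bar{\mathbf{d}} &= \frac{1}{k}\sum_{i=1}^{k} \mathbf{d}_i
  = \mathbf{s} + \frac{1}{k}\sum_{i=1}^{k} \boldsymbol{\varepsilon}_i,
  \qquad \mathrm{Var}(\bar{d}_j) = \frac{\sigma^2}{k} \\
\Pr\big(|\bar{d}_j - s_j| \ge t\big) &\le \frac{\sigma^2}{k\,t^2} \\
\Pr\Big(\max_{1 \le j \le D} |\bar{d}_j - s_j| \ge t\Big) &\le \frac{D\,\sigma^2}{k\,t^2}
\end{aligned}
```

Both bounds shrink as 1/k, so the centroid, and with it the per-dimension importance estimate, concentrates around the true signal as more feedback documents are added.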

Testing DIME’s Limits

Armed with theoretical understanding, we expanded DIME’s evaluation beyond the original settings. We tested newer embedding models including BGE-M3, mxbai, e5-large, and arctic-v2. These models use different training objectives and architectures, yet DIME consistently improved their performance.

BGE-M3, for instance, showed 12.1% improvement on MS MARCO DL’19 when retaining only 40% of dimensions. Arctic-v2, despite being optimized for cosine similarity rather than dot product, benefited similarly after proper normalization. This broad applicability suggests that dimension redundancy is a general characteristic of dense embeddings, not specific to certain architectures.

We also evaluated DIME on the entire BEIR benchmark for zero-shot retrieval. The results were particularly striking on challenging datasets. ArguAna saw 14% improvement with TAS-B when using just 20% of dimensions. Even on datasets where models already performed well, like Quora, DIME maintained or slightly improved performance without degradation.

Beyond GPT-4: Making LLM DIME Practical

The original LLM DIME relied exclusively on GPT-4, which poses cost and latency challenges for production systems. We systematically evaluated alternative language models ranging from 3B to 32B parameters. Qwen 2.5 models across different sizes all provided improvements, with the 32B variant approaching GPT-4’s effectiveness. Llama 3.1 and 3.2 models also performed well, with the 8B version offering an excellent balance of quality and efficiency.
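Swapping GPT-4 for an open model only changes where the estimator comes from. The sketch below assumes two callables you supply: `generate` (wrapping whichever LLM you deploy, such as a Llama 3.1 8B or Qwen 2.5 endpoint) and `encode` (the same embedding model used for your index). The function name, the prompt text, and the stub callables are hypothetical placeholders, not part of any specific library.

```python
from typing import Callable

import numpy as np

def llm_dime_mask(
    query_text: str,
    query_vec: np.ndarray,
    generate: Callable[[str], str],
    encode: Callable[[str], np.ndarray],
    keep_fraction: float = 0.4,
) -> np.ndarray:
    """Boolean mask of query dimensions to keep, estimated from an LLM answer."""
    # Hypothetical prompt; tune it for the LLM you deploy.
    answer = generate(f"Write a short passage answering: {query_text}")
    # Must be the same embedding model that produced query_vec and the index.
    answer_vec = np.asarray(encode(answer), dtype=float)
    importance = np.asarray(query_vec, dtype=float) * answer_vec
    n_keep = max(1, int(round(keep_fraction * importance.shape[0])))
    mask = np.zeros(importance.shape[0], dtype=bool)
    mask[np.argsort(importance)[::-1][:n_keep]] = True
    return mask

# Stub callables for illustration only; replace with your LLM client and encoder.
def fake_generate(prompt: str) -> str:
    return "stub answer"

def fake_encode(text: str) -> np.ndarray:
    return np.array([0.9, -0.2, 0.1, 0.4])

mask = llm_dime_mask("what is dime?", np.array([1.0, 0.5, 0.3, 0.2]),
                     fake_generate, fake_encode, keep_fraction=0.5)
```

Because the LLM is hidden behind a plain callable, comparing models of different sizes amounts to passing a different `generate` function.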

Interestingly, reasoning-optimized models like DeepSeek R1 underperformed despite their sophistication. These models produce shorter, more focused responses that lack the rich semantic content needed for effective dimension discrimination.

This finding highlights that more advanced models aren’t always better for every task.

Our Improvements: Weighted Averaging and Reranking

Our theoretical analysis suggested that treating all feedback documents equally might be suboptimal. Documents with higher relevance scores likely express the information need more strongly. We developed a softmax-weighted centroid approach that assigns higher weights to more relevant documents:

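```python
import numpy as np

def weighted_centroid(docs, scores, temperature=0.1):
    """Softmax-weighted centroid of feedback-document embeddings."""
    scores = np.asarray(scores, dtype=float)
    # Subtracting the max score leaves the softmax unchanged but avoids overflow.
    weights = np.exp((scores - scores.max()) / temperature)
    weights = weights / weights.sum()
    embeddings = np.stack([doc.embedding for doc in docs])
    return weights @ embeddings
```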

This modification consistently outperformed uniform averaging across all tested datasets. The temperature parameter controls weight concentration, with lower values focusing more heavily on top-ranked documents.
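To get a feel for the temperature, the sketch below prints the softmax weights for a handful of retrieval scores at several temperatures. The scores are made-up values for illustration. Note that a sensible temperature depends on the scale of your scores: cosine similarities and unnormalized dot products need different settings.

```python
import numpy as np

def softmax_weights(scores, temperature):
    """Softmax over retrieval scores; lower temperature concentrates the weight."""
    scores = np.asarray(scores, dtype=float)
    z = (scores - scores.max()) / temperature  # shift for numerical stability
    w = np.exp(z)
    return w / w.sum()

scores = [0.82, 0.80, 0.75, 0.60]  # hypothetical scores of the top-4 documents
for t in (1.0, 0.1, 0.01):
    print(t, np.round(softmax_weights(scores, t), 3))
```

At high temperature the weights approach uniform averaging; at low temperature nearly all of the weight goes to the top-ranked document.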

We also explored using DIME for reranking rather than re-retrieval. By applying dimension importance estimation to the top 100 initially retrieved documents, we can refine their scores without issuing a second query. This approach maintains the high recall of the initial retrieval while improving ranking precision, making it particularly suitable for production systems where latency is critical.
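A reranking pass can be sketched as follows, assuming you can fetch the stored embeddings of the top-n candidates together with their first-stage scores. It builds a softmax-weighted centroid from the top-k candidates, masks the low-importance query dimensions, and rescores only the candidates, so no second search is issued. The function name and default values are illustrative, not tuned recommendations.

```python
import numpy as np

def dime_rerank(query, candidates, first_stage_scores, k=5,
                keep_fraction=0.4, temperature=0.1):
    """Rerank first-stage candidates with PRF DIME (illustrative sketch).

    query:              (d,) query embedding
    candidates:         (n, d) embeddings of the top-n retrieved documents
    first_stage_scores: (n,) scores from the initial retrieval
    Returns candidate indices in the new order and their new scores.
    """
    query = np.asarray(query, dtype=float)
    candidates = np.asarray(candidates, dtype=float)
    s = np.asarray(first_stage_scores, dtype=float)
    top = np.argsort(s)[::-1][:k]
    # Softmax-weighted centroid of the top-k feedback documents.
    w = np.exp((s[top] - s[top].max()) / temperature)
    w /= w.sum()
    centroid = w @ candidates[top]
    # Keep the dimensions where the query agrees most with the centroid.
    importance = query * centroid
    n_keep = max(1, int(round(keep_fraction * query.shape[0])))
    keep = np.argsort(importance)[::-1][:n_keep]
    # Rescore candidates using only the kept dimensions.
    new_scores = candidates[:, keep] @ query[keep]
    order = np.argsort(new_scores)[::-1]
    return order, new_scores[order]
```

Since only the n candidates are rescored, the extra cost is a single small matrix-vector product per query, and recall is bounded by the first-stage candidate list.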

Practical Implementation Insights

Through extensive experimentation, we’ve identified key factors for successful DIME deployment. Start conservatively by retaining 60-80% of dimensions, then gradually reduce based on your specific data. Weighted averaging using the top 10 documents or PRF DIME with k=5 feedback documents provides a good balance of effectiveness and robustness. For most applications, this simple approach yields significant improvements without external dependencies.
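The conservative-first tuning strategy can be sketched as a sweep over retention levels on a labeled development set. Here `evaluate` is a placeholder you implement: it should run DIME with the given fraction of dimensions kept and return mean nDCG@10, where a fraction of 1.0 is the unmasked baseline. The nDCG helper uses linear gains; some toolkits use 2^rel - 1 instead.

```python
import numpy as np

def ndcg_at_10(ranked_ids, relevance):
    """nDCG@10 with graded relevance given as {doc_id: gain} (linear gains)."""
    gains = [relevance.get(doc_id, 0) for doc_id in ranked_ids[:10]]
    dcg = sum(g / np.log2(i + 2) for i, g in enumerate(gains))
    ideal = sorted(relevance.values(), reverse=True)[:10]
    idcg = sum(g / np.log2(i + 2) for i, g in enumerate(ideal))
    return dcg / idcg if idcg > 0 else 0.0

def sweep_keep_fraction(evaluate, fractions=(0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2)):
    """Evaluate retention levels from conservative to aggressive.

    `evaluate(fraction)` is supplied by you and should return mean nDCG@10
    on a dev set; fraction=1.0 is the baseline without masking.
    """
    baseline = evaluate(1.0)
    results = {f: evaluate(f) for f in fractions}
    best = max(results, key=results.get)
    return baseline, best, results
```

In practice you may stop the sweep early once nDCG@10 starts falling below the baseline, since further masking rarely recovers.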

Model normalization requires attention. Since DIME uses dot products for dimension scoring, models optimized for cosine similarity need vector normalization before applying the technique. This doesn’t diminish DIME’s effectiveness but is crucial for correct implementation.
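A minimal normalization step looks like this. After L2 normalization, the dot product of two vectors equals their cosine similarity, so per-dimension products reflect direction rather than vector length. The helper name is ours, and the random vectors stand in for embeddings from a cosine-trained model such as arctic-v2.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Row-wise L2 normalization so dot products equal cosine similarities."""
    x = np.asarray(x, dtype=float)
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    # eps guards against division by zero for all-zero vectors.
    return x / np.maximum(norms, eps)

# Normalize both sides before computing DIME importances and scores.
rng = np.random.default_rng(0)
docs = l2_normalize(rng.normal(size=(5, 8)))
query = l2_normalize(rng.normal(size=8))
cosine = docs @ query
```

Normalizing once at indexing time (for documents) and once per query keeps the rest of the DIME pipeline unchanged.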

The choice between PRF and LLM variants depends on your constraints. PRF DIME adds minimal latency and works entirely within your existing retrieval pipeline. LLM DIME can provide larger improvements, especially on complex queries, but requires additional infrastructure and adds the latency of an LLM call per query. Our experiments show that even small open-source LLMs can be effective, making this approach more accessible than when it was limited to GPT-4.

Rethinking Vector Search Optimization

Our journey from skepticism to validation revealed something profound about the current state of vector search: we’re likely using our embeddings inefficiently. This isn’t an indictment of existing models—it’s an opportunity.

The implications extend beyond DIME itself. If simply masking dimensions can yield such improvements, what other optimizations are hiding in plain sight? How many organizations are throwing computational resources at problems that could be solved through better understanding of their existing systems?

So what can you do now to see real impact based on these findings?

- Audit your current embedding usage: are you using all dimensions effectively?

- Experiment with query-dependent dimension masking on your existing models before considering upgrades

- Question the assumption that more dimensions always mean better results

- Consider how post-training optimizations could improve other parts of your ML pipeline

The complete implementation is available at github.com/pinecone-io/unveiling-dime. But more importantly, we hope this research inspires you to look at your vector search systems differently. In high-dimensional spaces, intelligence isn’t about using everything. It’s about knowing what to use when.

The answer to improving your search system might already be there, hiding in plain sight.

–

Cesare Campagnano

Cesare earned his Ph.D. from Sapienza University of Rome under the supervision of Gabriele Tolomei and Fabrizio Silvestri, during which time he also interned at Amazon. He then held a postdoc position at the same institution. His research interests revolve around Large Language Models and Information Retrieval.

Antonio Mallia

Antonio is a former Staff Research Scientist at Pinecone. Prior to that, he was an Applied Scientist on Amazon’s Artificial General Intelligence (AGI) team, working on large-scale web search.

He holds a Ph.D. in Computer Science from New York University, where he studied under Professor Torsten Suel, focusing on enhancing the effectiveness and efficiency of Information Retrieval systems.

Antonio has published extensively in top-tier conferences, including SIGIR, ECIR, EMNLP, CIKM, and WSDM. He also serves as a program committee member and reviewer for major IR and NLP conferences.

You May Also Like

MoneyGram launches stablecoin-powered app in Colombia

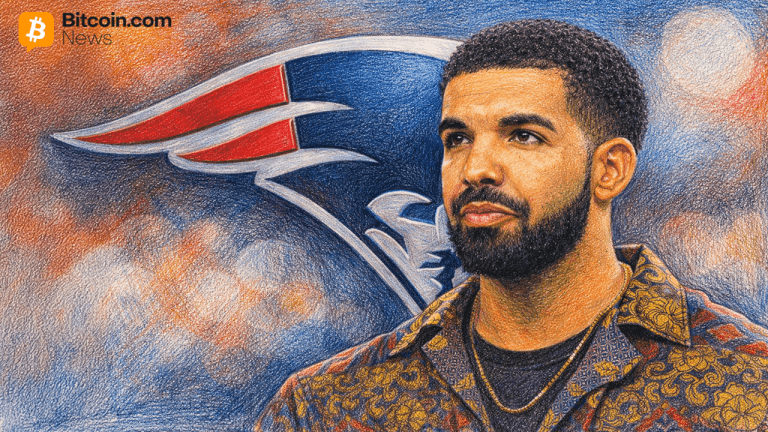

Rap Star Drake Uses Stake to Wager $1M in Bitcoin on Patriots Despite Super Bowl LX Odds